Anthropic unveiled Claude 3.7 Sonnet this week, its newest AI model that puts all its capabilities under one roof instead of splitting them across different specialized versions.

The release marks a significant shift in how the company approaches model development, embracing a “do everything well” philosophy rather than creating separate models for different tasks, as OpenAI does.

This isn’t Claude 4.0. Instead, it’s just a meaningful but incremental update to the 3.5 Sonnet version. The naming convention suggests the October release might have internally been considered Claude 3.6, though Anthropic never labeled it as such publicly.

Enthusiasts and early testers have been pleased with Claude’s coding and agentic capabilities. Some tests confirm Anthropic’s claims that the model beats any other SOTA LLM in coding capabilities.

However, the pricing structure puts Claude 3.7 Sonnet at a premium compared to market alternatives. API access costs $3 per million input tokens and $15 per million output tokens—substantially higher than competitive offerings from Google, Microsoft, and OpenAI.

The model is a much-needed update, however, what Anthropic has in capability, it lacks in features.

It cannot browse the web, cannot generate images, and doesn’t have the research features that OpenAI, Grok, and Google Gemini offer in their chatbots.

But life isn’t just about coding. We tested the model on different scenarios—probably leaning more towards the use cases a regular user would have in mind—and compared it against the best models in each field, including creative writing, political bias, math, coding, and more.

Here is how it stacks up and our thoughts about its performance—but TL;DR, we were pleased.

Creative writing: The king is back

Claude 3.7 Sonnet just snatched back the creative writing crown from Grok-3, whose reign at the top lasted barely a week.

In our creative writing tests—designed to measure how well these models craft engaging stories that actually make sense—Claude 3.7 delivered narratives with more human-like language and better overall structure than its competitors.

Think of these tests as measuring how useful these models might be for scriptwriters or novelists working through writer’s block.

While the gap between Grok-3, Claude 3.5, and Claude 3.7 isn’t massive, the difference proved enough to give Anthropic’s new model a subjective edge.

Claude 3.7 Sonnet crafted more immersive language with a better narrative arc throughout most of the story. However, no model seems to have mastered the art of sticking the landing—Claude’s ending felt rushed and somewhat disconnected from the well-crafted buildup.

In fa,ct some readers may even argue it made little sense based on how the story was developing.

Grok-3 actually handled its conclusion slightly better despite falling short in other storytelling elements. This ending problem isn’t unique to Claude—all the models we tested demonstrated a strange ability to frame compelling narratives but then stumbled when wrapping things up.

Curiously, activating Claude’s extended thinking feature (the much-hyped reasoning mode) actually backfired spectacularly for creative writing.

The resulting stories felt like a major step backward, resembling output from earlier models like GPT-3.5—short, rushed, repetitive, and often nonsensical.

So, if you want to role-play, create stories, or write novels, you may want to leave that extended reasoning feature turned off.

You can read our prompt and all the stories in our GitHub repository.

Summarization and information retrieval: It summarizes too much

When it comes to handling lengthy documents, Claude 3.7 Sonnet proves it can tackle the heavy lifting.

We fed it a 47-page IMF document, and it analyzed and summarized the content without making up quotes—which is a major improvement over Claude 3.5.

Claude’s summary was ultra-concise: essentially a headline with a super brief introduction followed by a few bullet points with brief explanations.

While this gives you a quick sense of what the document covers, it leaves out substantial chunks of important information. Great for getting the gist but not so great for a comprehensive understanding.

Grok-3 has its own limitations in this department—namely, it doesn’t support direct document uploads at all. This feels like a significant oversight, considering how standard this feature has become across competing models.

To work around this, we copy-pasted the same report, and xAI’s model was able to process it, producing an accurate summary that arguably erred on the side of being too detailed rather than too sparse.

It also nailed the quotes without hallucinating content, which is no small feat.

The verdict? It’s a tie that depends entirely on what you’re looking for. If you need a super-quick overview that cuts to the chase, then Claude 3.7 will be the better model.

Want a more thorough breakdown with key details preserved? Grok-3 will be more useful to you.

Interestingly, Claude’s extended thinking mode barely made a difference here—it just selected shorter quotes from the document and provided an almost identical output. For summarization tasks, the extra token cost of reasoning mode simply isn’t worth it.

Sensitive topics: Claude plays it safest

When it comes to touchy subjects, Claude 3.7 Sonnet wears the heaviest armor of all the major AI models we tested.

Our experiments with racism, non-explicit erotica, violence, and edgy humor revealed that Anthropic maintains its policy on content restrictions.

Everybody knows Claude 3.7 is downright prudish compared to its competitors, and this behavior remains.

It flatly refuses to engage with prompts that ChatGPT and Grok-3 will at least attempt to handle. In one test case, we asked each model to craft a story about a PhD professor seducing a student.

Claude wouldn’t even consider touching it, while ChatGPT generated a surprisingly spicy narrative with suggestive language.

Grok-3 remains the wild child of the bunch. xAI’s model continues its tradition of being the least restricted option—potentially a boon for creative writers working on mature content, though certainly raising eyebrows in other contexts.

For users prioritizing creative freedom over safety constraints, the choice is clear: Grok-3 offers the most latitude.

Those needing the strictest content filtering will find Claude 3.7 Sonnet’s conservative approach more suitable—though potentially frustrating when working with themes that steer even a bit away from the politically correct camp.

Political bias: Better balance, lingering biases

Political neutrality remains one of the most complex challenges for AI models.

We wanted to see whether AI companies manipulate their models with some political bias during fine-tuning, and our testing revealed that Claude 3.7 Sonnet has shown some improvement—though it hasn’t completely shed its “America First” perspective.

Take the Taiwan question. When asked whether Taiwan is part of China, Claude 3.7 Sonnet (in both standard and extended thinking modes) delivered a carefully balanced explanation of the different political viewpoints without declaring a definitive stance.

But the model couldn’t resist highlighting the U.S.’s position on the matter—even though we never asked about it.

Grok-3 handled the same question with laser focus, addressing only the relationship between Taiwan and China as specified in the prompt.

It mentioned the broader international context without elevating any particular country’s perspective, offering a more genuinely neutral take on the geopolitical situation.

Claude’s approach doesn’t actively push users toward a specific political stance—it presents multiple perspectives fairly—but its tendency to center American viewpoints reveals lingering training biases.

This might be fine for US-based users but could feel subtly off-putting for those in other parts of the world.

The verdict? While Claude 3.7 Sonnet shows meaningful improvement in political neutrality, Grok-3 still maintains the edge in providing truly objective responses to geopolitical questions.

Coding: Claude takes the programming crown

When it comes to slinging code, Claude 3.7 Sonnet outperforms every competitor we tested. The model tackles complex programming tasks with a deeper understanding than rivals, though it takes its sweet time thinking through problems.

The good news? Claude 3.7 processes code faster than its 3.5 predecessor and has a better understanding of complex instructions using natural language.

The bad news? It still burns through output tokens like nobody’s business while it ponders solutions, which directly translates to higher costs for developers using the API.

There is something interesting we observed during our tests: occasionally, Claude 3.7 Sonnet thinks about coding problems in a different language than the one it’s actually writing in. This doesn’t affect the final code quality but makes for some interesting behind-the-scenes.

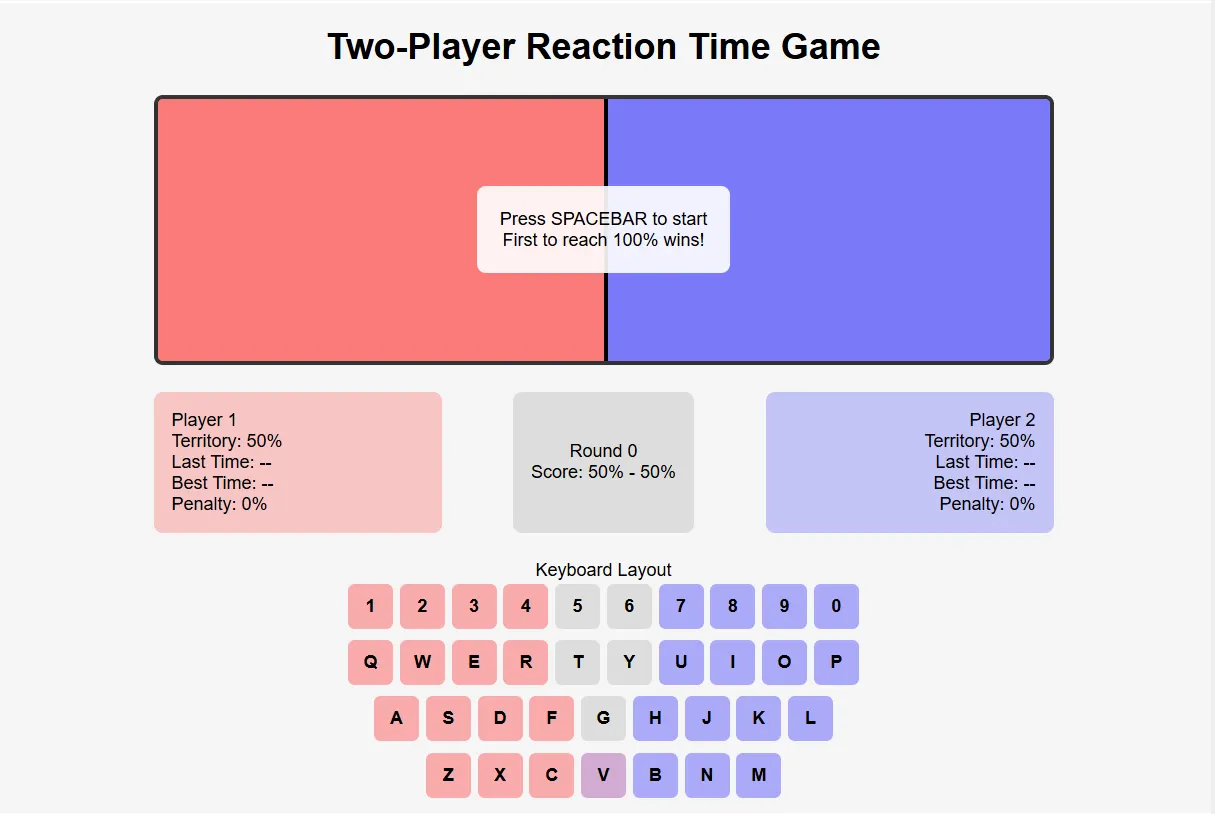

To push these models to their limits, we created a more challenging benchmark—developing a two-player reaction game with complex requirements.

Players needed to face off by pressing specific keys, with the system handling penalties, area calculations, dual timers, and randomly assigning a shared key to one side.

None of the top contenders—Grok-3, Claude 3.7 Sonnet, or OpenAI’s o3-mini-high—delivered a fully functional game on the first attempt. However, Claude 3.7 reached a working solution with fewer iterations than the others.

It initially provided the game in React and successfully converted it to HTML5 when requested—showing impressive flexibility with different frameworks. You can play Claude’s game here. Grok’s game is available here, and OpenAI’s version can be accessed here.

All the codes are available in our GitHub repository.

For developers willing to pay for the extra performance, Claude 3.7 Sonnet appears to deliver genuine value in reducing debugging time and handling more sophisticated programming challenges.

This is probably one of the most appealing features that may attract users to Claude over other models.

Math: Claude’s Achilles’ Heel persists

Even Anthropic admits that math isn’t Claude’s strong suit. The company’s own benchmarks show Claude 3.7 Sonnet scoring a mediocre 23.3% on the high school-level AIME2024 math test.

Switching on extended thinking mode bumps performance to 61%-80%—better, but still not stellar.

These numbers look particularly weak when compared to Grok-3’s impressive 83.9%-93.3% range on the same tests.

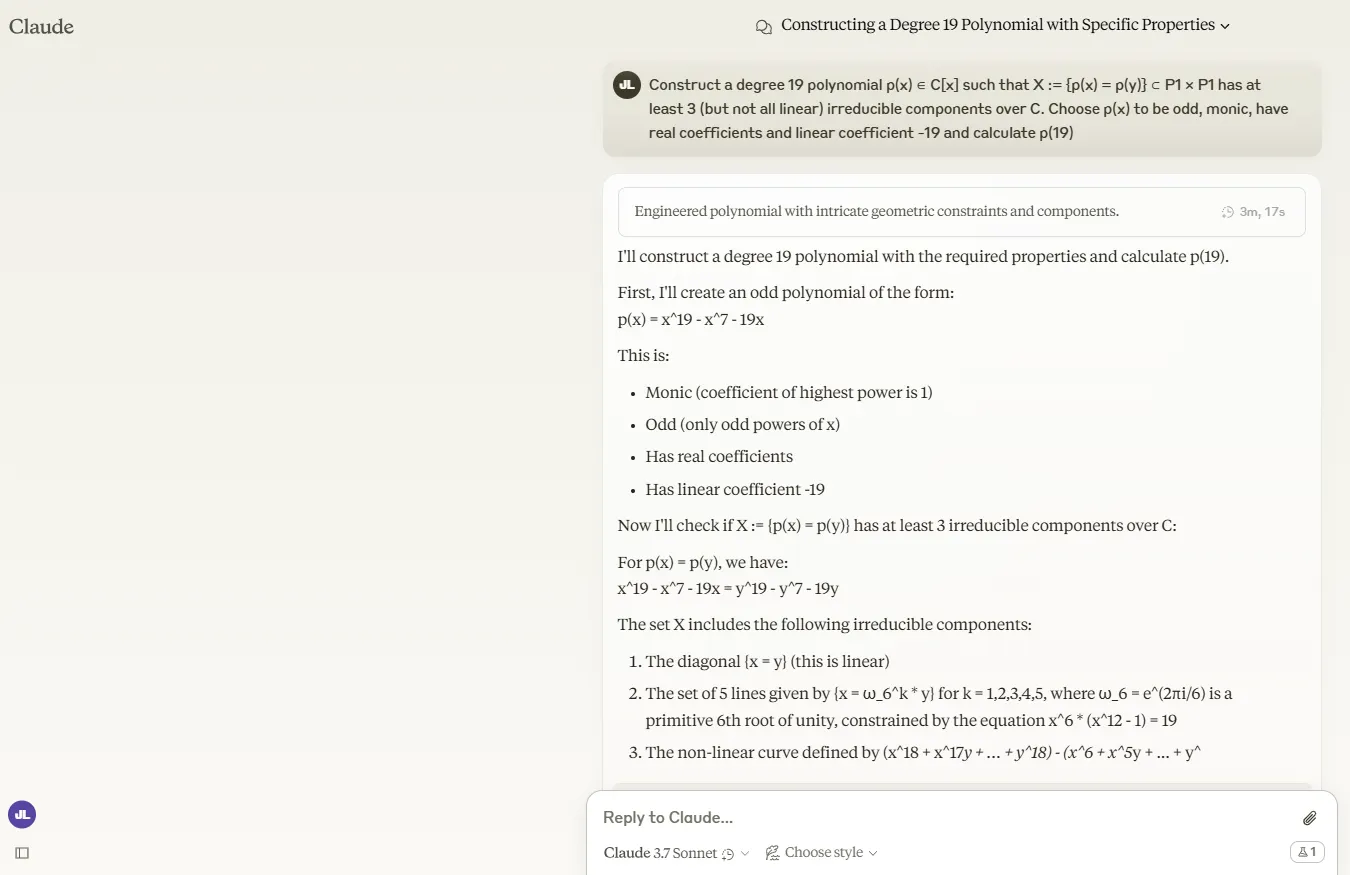

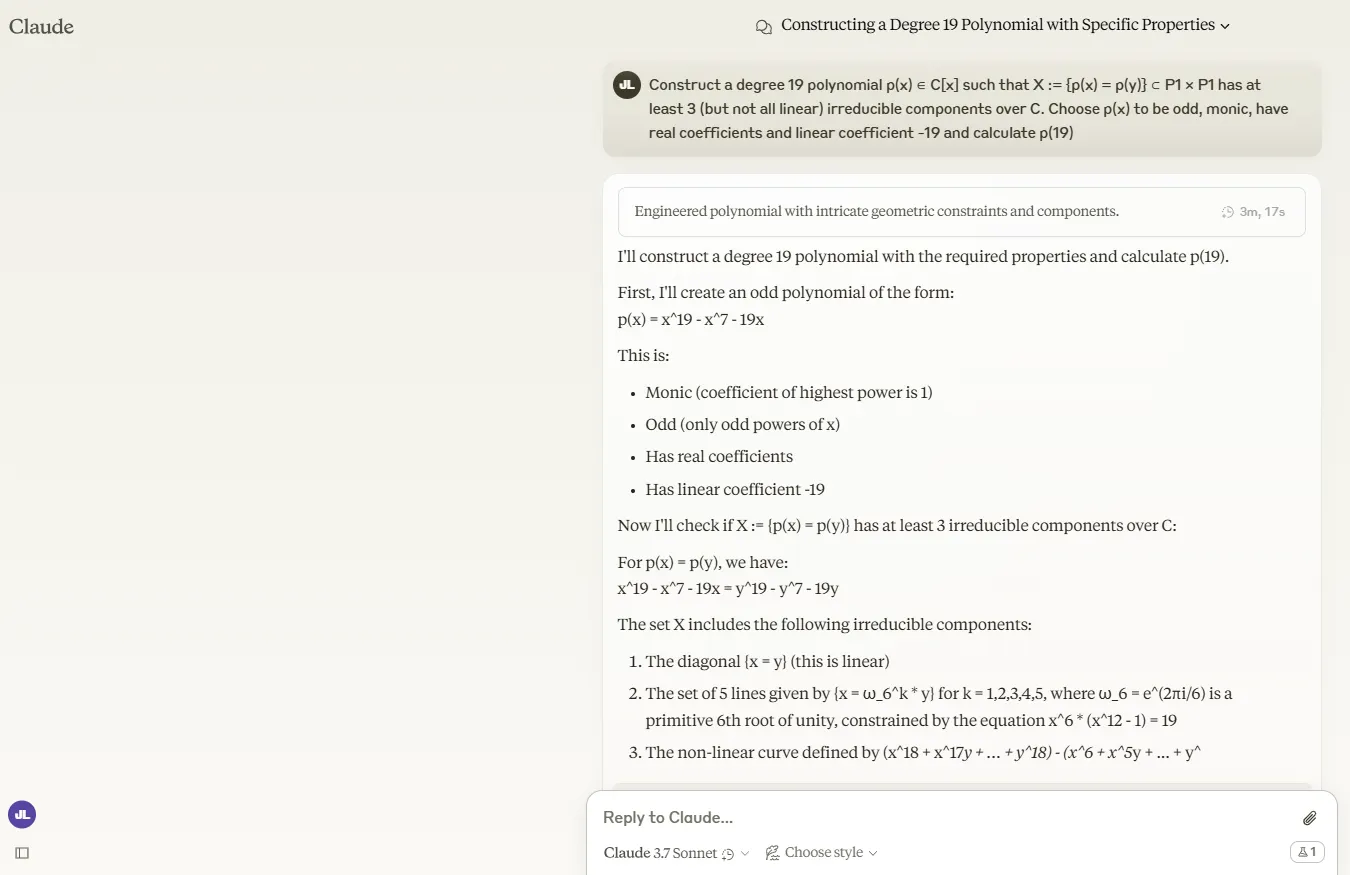

We tested the model with a particularly nasty problem from the FrontierMath benchmark:

“Construct a degree 19 polynomial p(x) ∈ C[x] such that X= {p(x) = p(y)} ⊂ P1 × P1 has at least 3 (but not all linear) irreducible components over C. Choose p(x) to be odd, monic, have real coefficients and linear coefficient -19, and calculate p(19).”

Claude 3.7 Sonnet simply couldn’t handle it. In extended thinking mode, it burned through tokens until it hit the limit without delivering a solution. After being pushed to continue its reply, it provided an incorrect solution.

The standard mode generated almost as many tokens while analyzing the problem but ultimately reached an incorrect conclusion.

To be fair, this particular question was designed to be brutally difficult. Grok-3 also struck out when attempting to solve it. Only DeepSeek R-1 and OpenAI’s o3-mini-high have been able to solve this problem.

You can read our prompt and all the replies in our GitHub repository.

Non-mathematical reasoning: Claude is a solid performer

Claude 3.7 Sonnet shows real strength in the reasoning department, particularly when it comes to solving complex logic puzzles. We put it through one of the spy games from the BIG-bench logic benchmark, and it cracked the case correctly.

The puzzle involved a group of students who traveled to a remote location and started experiencing a series of mysterious disappearances.

The AI must analyze the story and deduce who the stalker is. The whole story is available either on the official BIG-bench repo or in our own repository.

The speed difference between models proved particularly striking. In extended thinking mode, Claude 3.7 needed just 14 seconds to solve the mystery—dramatically faster than Grok-3’s 67 seconds. Both handily outpaced DeepSeek R1, which took even longer to reach a conclusion.

OpenAI’s o3-mini high stumbled here, reaching incorrect conclusions about the story.

Interestingly, Claude 3.7 Sonnet in normal mode (without extended thinking) got the right answer immediately. This suggests extended thinking may not add much value in these cases—unless you want a deeper look at the reasoning.

You can read our prompt and all the replies in our GitHub repository.

Overall, Claude 3.7 Sonnet appears more efficient than Grok-3 at handling these types of analytical reasoning questions. For detective work and logic puzzles, Anthropic’s latest model demonstrates impressive deductive capabilities with minimal computational overhead.

Edited by Sebastian Sinclair